Atom brands itself as “A hackable text editor for the 21st Century”. I first used Atom in August 2014 as an alternative to the Kate editor that comes with Ubuntu and which I had used for many years before that. I was drawn towards Atom by all the publicity around it on Hacker News, and it seemed to have momentum and a few useful features that Kate was lacking. It didn’t take me long to find myself using Atom exclusively.

Much is said online about Atom: that it is slow to respond to key presses, that it’s built in JavaScript, that it uses a Webview component to render everything, or some other deeply technical topic. None of that is worth discussing here.

Instead, a key feature for me is that Atom is built on an extensive plugin architecture and ships with a bunch of useful plugins by default. There are hundreds more available to download, but trawling through the list is a pain, and there are always a few gems buried in the long tail. To save you some hassle, here’s a list of the plugins that I use. I’ve divided them by the three main tasks for which I use Atom regularly – cartography, coding and writing.

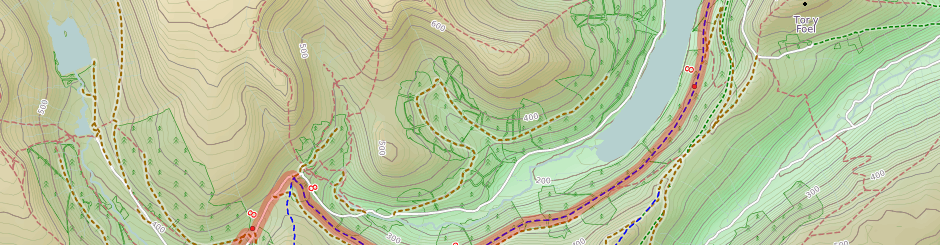

Cartography

language-carto – “Carto language highlight in Atom”. I only found out about this recently from Seth but, as you can imagine, it’s very useful when writing CartoCSS styles! Finding out about this plugin through a chance conversation is what sparked the idea for this post.

color-picker – “A Color Picker for Atom”. I use Atom to edit all my CartoCSS projects, so this seemed like a good idea, but I haven’t actually used it much since installing it. I prefer Inkscape’s HSL colour picker tab when I’m doing cartography, and working in Inkscape lets me quickly mock up different features together to see if the colours balance.

pigments – “A package to display colors in project and files”. This simply highlights colour definitions like #ab134f in the appropriate colour – almost trivial, but actually very useful when looking at long lists of colour definitions.

Development

git-blame – “Toggle git-blame annotations in the gutter of atom editor”. I only started using this last week and it’s a bit rough around the edges, but it’s quicker to run git blame within my editor than either running it in the terminal or clicking around on Bitbucket and GitHub. Linking from the blame annotations to the online diffs is a nice touch.

language-haml – “HAML package for Atom”. I was first introduced to HAML when working on Cyclescape and instantly preferred it to ERB, so all my own Ruby on Rails applications use HAML too. This plugin adds syntax highlighting to Atom for HAML files.

linter – “A Base Linter with Cow Powers”, linter-rubocop – “Lint Ruby on the fly, using rubocop”, and linter-haml – “Atom linter plugin for HAML, using haml-lint”. I’m a big fan of linters, especially rubocop – there’s a separate blog post on this topic in the works. These three plugins let me see the warnings in Atom as I’m typing the code, rather than waiting until I save and remember to run each tool separately. Unfortunately it means I get very easily distracted when contributing to projects that have outstanding rubocop warnings!

project-manager – “Project Manager for easy access and switching between projects in Atom”. I used this for a while, but I found it a bit clunky. Since I’m bound to have a terminal open, I find it quicker to navigate to the project directory and launch Atom from there, instead of clicking around through user interfaces.

Writing

Zen – “distraction free writing”. This is great when I’m writing prose rather than code. Almost all my writing now is in Markdown (mainly for Jekyll sites; again, a topic for another post), and Atom comes with effective Markdown syntax highlighting built in. I’m using this plugin to help draft this post!

That’s it! Perhaps you’ve now found an Atom plugin that you didn’t know existed, or been inspired to download Atom and try it out. If you have any suggestions for plugins that I might find useful, please let me know!